Zero-shot Authenticity: Robust, Training-Free AI-Generated Image Detection

Emirhan Bilgiç†, Aaron Weissberg†, Adrian Popescu

Université Paris-Saclay

†Equal contribution

arXiv link: Coming soon!

Abstract

The rapid proliferation of AI-generated images has necessitated robust and reliable detection methods. In this work, we introduce two novel training-free approaches to identify AI-generated images effectively: Inpainting-based Detection and Non-parametric Patch Search.

1. Inpainting-Based Detection

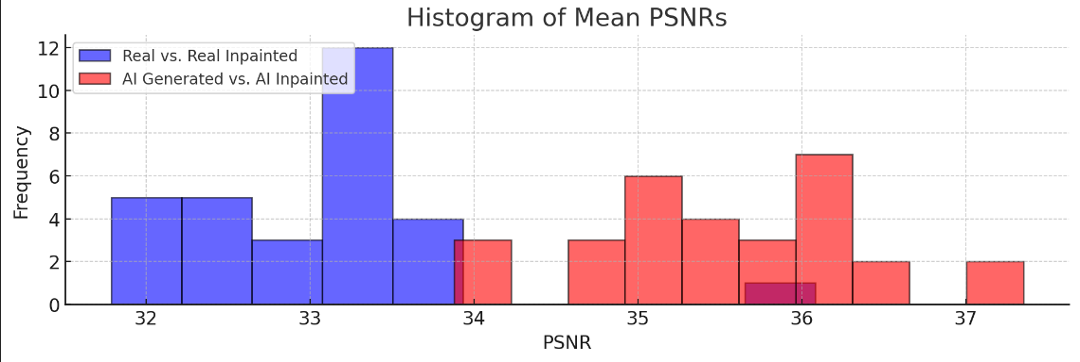

This method uses Stable Diffusion-based inpainting to measure how reconstruction fidelity differs between real and AI-generated images. Random regions of an image are masked, and the inpainting model fills them in. The reconstructed regions are then compared with the original image using the Peak Signal-to-Noise Ratio (PSNR). Our analysis shows that inpainting performs poorly on real images, reconstructing them less faithfully than AI-generated ones.

Figure 1. Top left: real image, where inpainting fails. Top right: inpainted real image. Bottom left: AI-generated image. Bottom right: inpainted AI-generated image.

Figure 2. Histogram of Results.
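
The scoring step of the inpainting-based method can be illustrated with a minimal sketch. It assumes images are NumPy arrays of shape (height, width, channels) with 8-bit values and that a single square region is masked. The helper names `random_box_mask` and `masked_psnr` are hypothetical, and the inpainting model itself is left out: `reconstructed` stands for whatever the inpainting step returns. The mask shape, the number of masks, and any decision threshold are assumptions not specified here.

```python
import numpy as np


def random_box_mask(height, width, box_size, rng):
    """Boolean mask with one randomly placed square region set to True.

    Assumption: a single square box; the actual mask shape is not specified.
    """
    top = int(rng.integers(0, height - box_size + 1))
    left = int(rng.integers(0, width - box_size + 1))
    mask = np.zeros((height, width), dtype=bool)
    mask[top:top + box_size, left:left + box_size] = True
    return mask


def masked_psnr(original, reconstructed, mask, max_value=255.0):
    """PSNR in dB, computed only over the pixels where mask is True."""
    # Cast to float first so uint8 subtraction cannot wrap around.
    a = original.astype(np.float64)[mask]
    b = reconstructed.astype(np.float64)[mask]
    mse = np.mean((a - b) ** 2)
    if mse == 0:
        return float("inf")  # perfect reconstruction
    return 10.0 * np.log10(max_value ** 2 / mse)
```

Under the observation above, a real image would be expected to receive a lower masked PSNR than an AI-generated one after inpainting. Restricting the metric to the masked pixels keeps the untouched parts of the image from inflating the score.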

2. Non-Parametric Patch Search

This method builds a reference dataset of real and AI-generated images, from which small patches are extracted. For a given input image, randomly selected patches are compared against the reference patches to find the closest matches.
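
The patch search can be sketched as a brute-force nearest-neighbor lookup. This is an illustration under stated assumptions, not the authors' implementation: patches are compared by Euclidean distance on raw pixel values, labels are 0 for real and 1 for AI-generated, and the final score is the fraction of query patches whose nearest neighbor is AI-generated. The function names, the distance metric, and the scoring rule are all hypothetical choices.

```python
import numpy as np


def extract_random_patches(image, patch_size, n_patches, rng):
    """Return n_patches flattened square patches taken at random positions."""
    height, width = image.shape[:2]
    tops = rng.integers(0, height - patch_size + 1, size=n_patches)
    lefts = rng.integers(0, width - patch_size + 1, size=n_patches)
    patches = [
        image[t:t + patch_size, l:l + patch_size].reshape(-1)
        for t, l in zip(tops, lefts)
    ]
    return np.stack(patches).astype(np.float64)


def nearest_labels(query, bank, bank_labels):
    """Label of the closest bank patch for each query patch.

    Assumption: squared Euclidean distance on raw pixels.
    """
    d2 = (
        (query ** 2).sum(axis=1)[:, None]
        - 2.0 * query @ bank.T
        + (bank ** 2).sum(axis=1)[None, :]
    )
    return bank_labels[np.argmin(d2, axis=1)]


def fraction_generated(query, bank, bank_labels):
    """Share of query patches whose nearest neighbor is labeled 1 (AI-generated)."""
    return float(np.mean(nearest_labels(query, bank, bank_labels) == 1))
```

Here `bank` would hold the patches extracted from the dataset and `bank_labels` a NumPy array with one label per patch. For a large patch dataset, an approximate nearest-neighbor index would likely replace the dense distance matrix used in this sketch.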

In addition to these detection techniques, we introduce an inpainting-based adversarial attack designed to challenge and bypass conventional AI-generated image detection models.

Our proposed methods are training-free, robust, and adaptable to real-world scenarios, offering a significant step forward in zero-shot detection of AI-generated content.